The 10 most important AI topics to learn in 2025 are agentic AI, multimodal and video models, small and specialized models with edge AI, enterprise AI platforms and grounding (RAG), AI governance and regulation, AI‑augmented development (copilots), AI engineering and evaluation (MLOps/LLMOps), AI for science and healthcare, efficient compute and custom silicon, and embodied AI and robotics. Prioritize hands‑on familiarity with agentic AI, multimodal AI, and edge AI, as these underpin many enterprise and product roadmaps this year.

Agentic AI

What it is

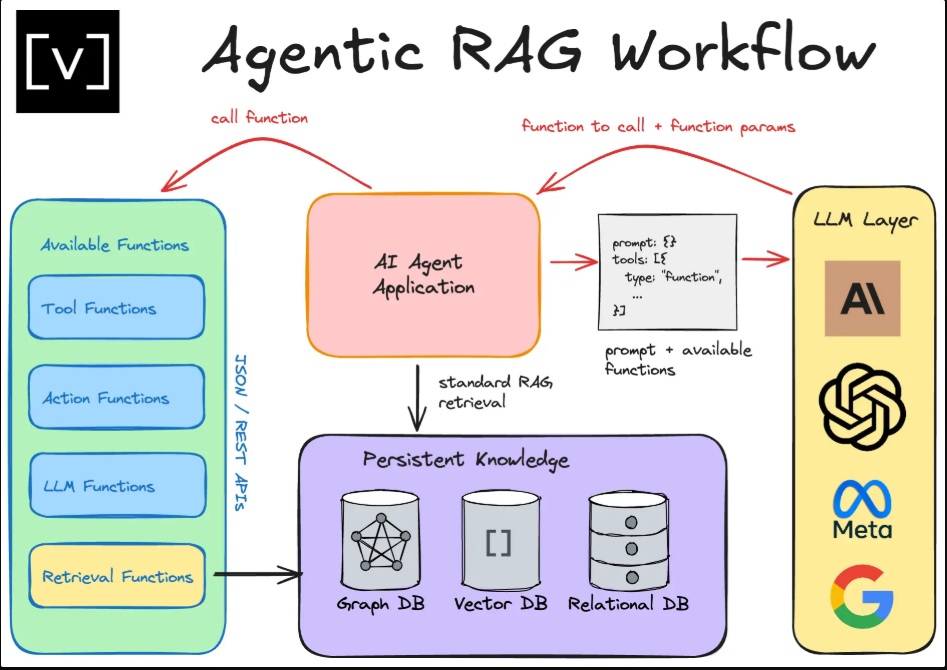

Agentic AI systems combine reasoning, planning, memory, and tool use to autonomously execute multi‑step tasks, moving beyond chat into workflow orchestration and decision support across business functions. 2025 roadmaps from major vendors and analysts highlight agents as a core shift in how people and software collaborate, not just an interface upgrade. These systems often coordinate other models, services, and data sources, acting as virtual coworkers with bounded autonomy and auditable traces.

Why it matters in 2025

Enterprises are prioritizing AI platforms that safely deliver performance, profitability, and security, with agentic patterns central to ROI narratives this year. Analysts forecast rapid expansion in agentic customer service and operations, with autonomy bounded by oversight to mitigate failure modes and reputational risk. Organizations are designing human‑in‑the‑loop gates, memory scopes, and delegation policies to align agents with enterprise controls.

Core capabilities to learn

- Task decomposition, tool calling, and retrieval‑augmented grounding to keep outputs accurate and traceable in dynamic environments.

- Long‑context memory design, episodic vs. semantic memory trade‑offs, and safe persistence policies for auditability and privacy.

- Agent evaluation: goal completion, latency/cost budgets, side‑effect minimization, and red‑teaming workflows under realistic constraints.

Architecture patterns

Multi‑agent systems coordinate specialists (planner, executor, critic) with shared memory and governance hooks for approvals, observability, and rollback. Orchestration frameworks integrate APIs, knowledge graphs, and RAG pipelines for grounding, coupled with policy engines to enforce safety and compliance. Telemetry layers measure utility, cost, and risk per task to guide continuous improvement and budget control at scale.
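
As one way to picture the planner, executor, and critic pattern with an approval hook, here is a minimal Python sketch; it is not any framework's actual API. The tool names (`lookup_order`, `issue_refund`), the fixed plan returned by `plan`, and the `approve` callback are hypothetical stand-ins, and a real system would replace `plan` and `critic` with model calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    tool: str
    arg: str
    irreversible: bool = False


@dataclass
class Trace:
    """Append-only record of what the agent did, kept for audits."""
    events: list = field(default_factory=list)

    def log(self, kind: str, tool: str) -> None:
        self.events.append((kind, tool))


# Hypothetical tool registry. Least privilege: only listed tools can be called.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
    "issue_refund": lambda order_id: f"refund issued for {order_id}",
}


def plan(goal: str) -> list[Step]:
    # Stand-in for an LLM planner: always returns the same two-step plan.
    return [
        Step("lookup_order", goal),
        Step("issue_refund", goal, irreversible=True),
    ]


def critic(result: str) -> bool:
    # Stand-in for a model-based critic: accept non-empty, non-error results.
    return bool(result) and "error" not in result.lower()


def run_agent(goal: str, approve: Callable[[Step], bool], trace: Trace) -> list[str]:
    results = []
    for step in plan(goal):
        if step.tool not in TOOLS:
            trace.log("blocked", step.tool)   # tool outside the allow-list
            continue
        if step.irreversible and not approve(step):
            trace.log("denied", step.tool)    # human-in-the-loop gate said no
            continue
        result = TOOLS[step.tool](step.arg)
        trace.log("executed", step.tool)
        if critic(result):
            results.append(result)
        else:
            trace.log("rejected", step.tool)
    return results
```

Calling `run_agent("A17", approve=lambda step: False, trace=Trace())` runs the lookup but skips the refund, and the trace records the denial. The design point is that irreversible steps pass through the approval callback before any tool runs, so the gate cannot be bypassed by the planner.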

Risks and governance

Common risks include hallucinated actions, tool misuse, data exfiltration, prompt injection, and escalating autonomy without safeguards. Governance guidance stresses executable policies, least‑privilege tool access, and objective evaluations tied to business KPIs and incident response playbooks. Adoption data suggests substantial experimentation paired with caution around scale‑up, reflecting both promise and operational risk.

Practical starter projects

- Customer service triage agent with bounded actions, retrieval grounding, and supervisor approval for irreversible steps.

- IT operations copilot to analyze logs, propose fixes, and open tickets with auditable automation and rollback.

- Finance or HR workflow agent that drafts, checks, and routes documents with policy‑aware tool use and human sign‑off.

Multimodal and video models

What it is

Multimodal models natively reason over text, images, audio, and video, enabling richer context, more grounded outputs, and broader automation across digital and physical tasks. By early 2025, leading foundation models added advanced reasoning and multimodal I/O, improving long‑context coherence and real‑time data integration for complex workflows. This shift expands use cases from enterprise search and CX to inspections, analytics, and assistive interfaces across industries.

Why it matters in 2025

As agents get more capable, multimodality becomes the substrate for perceiving, planning, and acting in richer environments—including video‑first processes like monitoring, training, and quality assurance. Reports emphasize rapid advances in video generation and analysis, alongside new estimates for inference costs that shape deployment choices and UX design. Multimodal enterprise search and assistants are also a priority for productivity and knowledge access.

Core capabilities to learn

- Multimodal prompting and grounding, including image‑aware RAG, structured extraction, and caption‑to‑action pipelines.

- Video understanding tasks—temporal grounding, event detection, and summarization—for operations and safety analytics.

- Latency and cost optimization via model selection, resolution control, and on‑device pre‑processing for scalable experiences.

Architecture patterns

Pipelines increasingly combine perception models with task‑specialist LLMs and retrieval layers, binding outputs to enterprise data for reliability. Tool‑use orchestration enables “see‑think‑act” loops: analyze media, hypothesize, query sources, and execute actions with guardrails. Observability includes content provenance, safety filters, and resource controls for high‑volume workloads like contact centers and operations.

Risks and governance

Unique risks include deepfakes, misinterpretation of context, and biased perception across demographics and environments. Controls span content authentication, policy prompts, domain‑specific evaluation sets, and human oversight for high‑impact decisions. Regulatory expectations increasingly include transparency and documentation for data sources and model behavior in sensitive sectors.

Practical starter projects

- Multimodal enterprise assistant that answers with citations, diagrams, or annotated screenshots for faster resolution.

- Video analytics for safety or compliance with event summaries, incident flags, and privacy‑aware data handling.

- Visual inspection or field‑service workflows blending image analysis, retrieval, and step‑by‑step guidance.

Small, specialized models and edge AI

What it is

Beyond frontier models, 2025 emphasizes smaller, task‑tuned models for cost, latency, and privacy benefits in production. Edge AI brings intelligent inference to devices and local servers, enabling real‑time decisions without round‑trip to cloud data centers. Market analyses project strong growth as industries adopt on‑device perception and control for responsive, resilient systems.

Why it matters in 2025

Enterprises seek a portfolio approach—frontier models for complex reasoning and compact models for high‑volume or sensitive workloads. Edge deployments cut latency and bandwidth, support offline scenarios, and improve data sovereignty, particularly in regulated and safety‑critical contexts. Hardware advances and optimized runtimes expand what’s feasible on mobile, embedded, and industrial devices.

Core capabilities to learn

- Distillation, quantization, pruning, and hardware‑friendly architectures for efficient inference under strict budgets.

- Edge pipelines: sensor fusion, streaming analytics, and event‑driven actions with local fail‑safes and fleet updates.

- Privacy and MLOps at the edge: telemetry, A/B testing, and over‑the‑air rollouts with safety checks and rollback plans.
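
To make the quantization item in the list above concrete, the sketch below shows symmetric per-tensor int8 quantization in plain Python. It illustrates the arithmetic only; production toolchains typically add per-channel scales, calibration data, and hardware-specific kernels. The function names `quantize_int8` and `dequantize` are illustrative, not a library API.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid division by zero when all weights are zero (or the list is empty).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats; the error per weight is at most scale / 2."""
    return [q * scale for q in quantized]
```

For example, `quantize_int8([0.5, -1.27, 0.0, 1.27])` yields the integers `[50, -127, 0, 127]` with a scale of roughly 0.01, so each weight is stored in one byte instead of four at the cost of a small rounding error.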

Architecture patterns

Hybrid topologies combine on‑device pre‑processing and classification with cloud agents for heavy reasoning and orchestration. Gateways and edge servers aggregate signals and enforce policies before escalating to central services for audits or complex tasks. Model catalogs span frontier APIs and local models, routed by policy‑driven selectors for cost and performance.

Risks and governance

Edge AI increases the operational footprint for updates, monitoring, and security across diverse hardware and networks. Attack surfaces expand with physical access and supply‑chain dependencies, requiring secure boot, attestation, and key management. Responsible deployment includes fairness checks across environments, power budgets, and safe‑fail defaults.

Practical starter projects

- On‑device vision for defect detection with quantized models and local alerts, escalating uncertain cases to cloud review.

- Mobile multimodal assistant for field technicians with offline guidance and synchronized reports.

- Edge analytics for facilities monitoring with event‑driven maintenance and privacy‑preserving data retention.

Enterprise platforms and grounding (RAG)

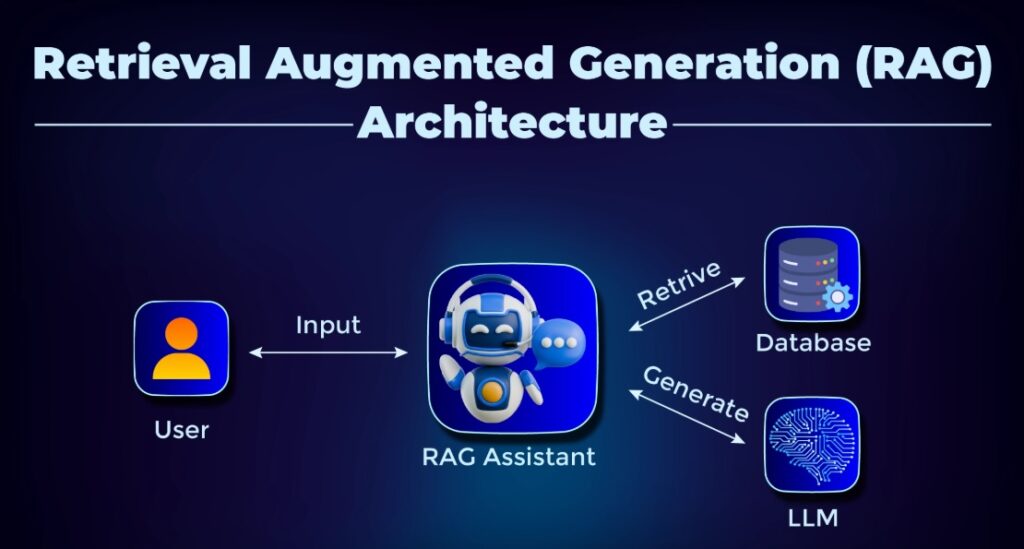

What it is

Organizations are consolidating around AI platforms that balance performance, profitability, and security while integrating internal data to improve answer quality and trust. Retrieval‑augmented generation (RAG) and other grounding techniques connect models to enterprise sources for cited, verifiable outputs. 2025 platform strategies emphasize multimodal capabilities, evaluation, and lifecycle management across cloud and edge.

Why it matters in 2025

Enterprises want measurable ROI through cost control, reliability, and integration with existing systems of record. Assistive and enterprise search use cases rank highly because grounding boosts accuracy and adoption in daily workflows. Platform alignment streamlines governance, deployment, and vendor management at scale.

Core capabilities to learn

- Data pipelines for indexing, chunking, embeddings, and metadata design that match retrieval to task intent.

- Guardrails: policy prompts, filtering, citation enforcement, and verification steps before actions or publication.

- Evaluation harnesses: offline tests and online telemetry that link quality to business KPIs and costs.
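
The indexing and retrieval steps in the list above can be sketched end to end in a few lines. This is a toy illustration under loud assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, the chunk sizes are arbitrary, and the index is an in-memory list rather than a vector store. The source name kept with each chunk is the metadata that makes citations possible.

```python
import math
from collections import Counter


def chunk(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, last_start, step)]


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    """Return (source, chunk_text, vector) triples; source is the citation."""
    return [
        (source, piece, embed(piece))
        for source, text in docs.items()
        for piece in chunk(text)
    ]


def retrieve(query: str, index: list, k: int = 2) -> list[tuple[float, str, str]]:
    """Return the k best (score, source, chunk_text) matches for the query."""
    q = embed(query)
    scored = [(cosine(q, vec), source, text) for source, text, vec in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]
```

A generation step would then receive the retrieved chunks together with their sources and be required to cite them, which is where the citation-enforcement guardrail attaches.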

Architecture patterns

Composable stacks integrate vector stores, knowledge graphs, orchestration, and policy engines behind a unified platform experience. Agentic layers call tools, search sources, and write to systems with approvals and observability for audit and rollback. Multimodal search extends beyond text to images, audio, and video, improving discovery and instruction‑following.

Risks and governance

Grounding reduces hallucinations but does not eliminate them without careful retrieval design, freshness strategies, and output checks. Platform sprawl and unmanaged shadow tools raise risk and costs unless governed by clear standards and catalogs. Cost spikes can occur without routing, caching, and model selection aligned to task complexity.

Practical starter projects

- Cited enterprise Q&A with retrieval from curated sources, answer grading, and user feedback loops.

- Domain copilots connected to CRMs, wikis, and ticketing with permission‑aware retrieval and approval workflows.

- KPI‑linked evaluation dashboards to monitor usefulness, deflection, and cost per task.

Governance, risk, and regulation

What it is

AI governance spans organizational policies, technical controls, and regulatory compliance, with 2025 marked by concrete obligations under the EU AI Act and sectoral frameworks elsewhere. Risk management guidance from NIST’s Generative AI Profile (NIST AI 600‑1, a companion to the AI Risk Management Framework) provides technology‑specific controls for GenAI builders and deployers. Timelines now affect providers of general‑purpose AI (GPAI) models, high‑risk system operators, and national authorities establishing oversight structures.

Why it matters in 2025

The EU AI Act entered into force on August 1, 2024, with staged applicability reaching key governance provisions and GPAI obligations in August 2025, and high‑risk obligations in August 2026. Mid‑2025 roundups emphasize Codes of Practice, penalties, and the establishment of the AI Office as factors shaping operational expectations this year. Clear timelines help organizations prioritize controls, documentation, and design changes before enforcement.

Core capabilities to learn

- AI TRiSM (AI trust, risk, and security management): transparency, security, and model governance controls tied to enterprise policies and audits.

- Use‑case classification and control mapping for prohibited, high‑risk, and GPAI scenarios in regulated regions.

- Incident response, content provenance, and robust evaluations to detect and mitigate misuse or harmful behavior.

Key timelines and obligations

Member States must designate authorities and set penalties by August 2, 2025, while GPAI governance and notification provisions begin applying the same day in the EU. High‑risk obligations apply from August 2026, with remaining provisions fully live by 2027, shaping vendor and deployer responsibilities. Practical guides summarize these milestones to support planning and coordination across legal and engineering teams.

Practical starter projects

- Map systems to AI Act categories, define required documentation, and implement technical controls with audits.

- Align risk registers and model cards with NIST GenAI Profile actions for training, deployment, and monitoring.

- Establish AI Office‑like governance rituals internally: change control, red‑teaming cadence, and incident reporting.

AI‑augmented development and copilots

What it is

Copilots and agentic assistants increasingly automate coding, documentation, testing, and workflow integration across the SDLC and adjacent business processes. Toolchains enable both developers and non‑technical roles to compose agents that call APIs and enterprise systems safely. This expands from code completion to orchestration and execution of real tasks under policy.

Why it matters in 2025

Organizations seek productivity gains and fewer handoffs by embedding AI into daily tools and platforms. Reports highlight improved reasoning in coding tasks and broader autonomy for routine operations, driving tangible returns when evaluated rigorously. Enterprises prioritize guardrails and evaluation to balance speed with safety and quality.

Core capabilities to learn

- Prompt engineering for code, test generation, and refactoring with repository‑aware context injection.

- CI/CD integration, policy gates, and environment‑aware tool use so copilots act within safe, reproducible boundaries.

- Outcome measurement: PR quality, defect rates, cycle time, and cost per unit of output to validate ROI.

Risks and governance

Risks include insecure code suggestions, license contamination, and incorrect automation of irreversible actions without review. Mitigations include vulnerability scanning, license checks, approval workflows, and least‑privilege credentials for tools. Program success depends on change management, training, and feedback loops, not just tooling.

Practical starter projects

- Org‑specific coding copilot with style guides, dependency policies, and gated merge workflows.

- DevOps assistant for incident triage, runbook generation, and safe remediation proposals.

- Cross‑functional automations: requirements drafting, test case generation, and analytics reporting with approvals.

AI engineering and evaluation (MLOps/LLMOps)

What it is

With deployments scaling, MLOps and LLMOps practices focus on evaluation, observability, cost control, and reproducibility across data, models, and agents. 2025 analyses add new visibility into inference costs, hardware, and publication trends that influence engineering trade‑offs. Organizations want systems to measure AI efficacy, not just accuracy, linking quality to business impact.

Why it matters in 2025

As agents and multimodal apps proliferate, robust evaluation harnesses and telemetry are needed to prevent regressions and cost overruns. Teams use curated and synthetic data to post‑train models while tracking shifts in behavior and bias across versions. Lifecycle governance intersects with regulation and platform strategy, making engineering discipline a differentiator.

Core capabilities to learn

- Offline evaluation suites with task‑specific rubrics, golden sets, and hallucination checks for grounded outputs.

- Online experimentation and guardrails, including anomaly detection, rate limiting, and rollback playbooks.

- Cost and latency engineering via model routing, caching, streaming, and hardware‑aware deployment choices.
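
An offline evaluation suite of the kind listed above can start very small. The sketch below assumes a hypothetical `Answer` shape (text, cited sources, cost) and a golden set of `Case` records; the substring check and the "sources must be a subset of allowed sources" rule are deliberately crude proxies for task rubrics and hallucination checks, not a standard metric.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str
    must_contain: str      # expected fact, checked as a substring
    allowed_sources: set   # sources a grounded answer may cite


@dataclass
class Answer:
    text: str
    sources: list
    cost_usd: float


def evaluate(system: Callable[[str], Answer], golden: list, budget_usd: float) -> dict:
    """Run every golden case through `system` and summarize quality and cost."""
    if not golden:
        raise ValueError("golden set must not be empty")
    correct = grounded = 0
    total_cost = 0.0
    for case in golden:
        answer = system(case.prompt)
        total_cost += answer.cost_usd
        if case.must_contain.lower() in answer.text.lower():
            correct += 1
        # Grounded here means: cites something, and only allowed sources.
        if answer.sources and set(answer.sources) <= case.allowed_sources:
            grounded += 1
    n = len(golden)
    return {
        "accuracy": correct / n,
        "grounded_rate": grounded / n,
        "total_cost_usd": total_cost,
        "within_budget": total_cost <= budget_usd,
    }
```

Running `evaluate` on every prompt, retrieval, or model change and failing the build when `accuracy` drops or `within_budget` is false is one simple way to turn the report into a regression gate.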

Architecture patterns

Central catalogs track datasets, prompts, models, and policies with lineage and approvals. Observability pipelines capture inputs, decisions, tool calls, and outcomes for audits and root‑cause analysis. Cost dashboards attribute spend to tasks and user journeys to steer optimization work.

Practical starter projects

- Build an evaluation harness for top use cases with scenario coverage, bias checks, and budget thresholds.

- Implement prompt and retrieval versioning with A/B testing and automated regression alerts.

- Stand up a cost observability module tied to product metrics and SLAs for agent workflows.

AI for science and healthcare

What it is

AI accelerates discovery across protein dynamics, materials, and drug design while supporting clinical decision‑making, imaging, and operations in healthcare. 2025 coverage expands on scientific throughput and medical integration, reflecting maturing pipelines from lab to clinic. Vendor outlooks also flag domain‑tuned models tackling complex tasks in science and health.

Why it matters in 2025

Improved reasoning and multimodality support literature synthesis, experiment planning, and structure‑function predictions, speeding cycles in R&D. Clinical settings emphasize safety, explainability, and workflow fit alongside measurable gains in speed and accuracy for care teams. Investments in data infrastructure and compute continue to underpin progress and access.

Core capabilities to learn

- Domain‑specific retrieval and curation, linking models to validated sources and ontologies for trustworthy outputs.

- Evaluation with clinically or scientifically relevant endpoints, including uncertainty estimation and error analysis.

- Compliance and privacy engineering for sensitive data across institutions and jurisdictions.

Architecture patterns

Pipelines integrate structured biomedical knowledge with unstructured literature and lab results for grounded reasoning. Human‑in‑the‑loop review and decision support keep clinicians and scientists in control while capturing feedback for improvement. Compute and storage strategies consider confidentiality, cost, and collaboration needs at scale.

Practical starter projects

- Literature review copilots with citations and confidence, scoped to validated corpora and clinician review.

- Imaging triage assistants that flag abnormalities with explainable overlays and routing to specialists.

- Lab automation planners that propose experiments and track results under auditable protocols.

Efficient compute, custom silicon, and power

What it is

Compute and power constraints are central to AI economics, driving adoption of custom silicon, optimized data centers, and hardware‑software co‑design. Hyperscalers invest in AI‑ready capacity with specialized networking and cooling to meet demand. Sustainability commitments push toward greener infrastructure without degrading performance.

Why it matters in 2025

Enterprise AI platforms depend on predictable performance and cost curves, heavily influenced by chip choice, topology, and energy efficiency. Google and peers are expanding TPU/GPU fleets, liquid cooling, and optical switching to scale reliably and responsibly. Sustainable AI is a strategic priority, aligning innovation with environmental goals and regulatory expectations.

Core capabilities to learn

- Model‑hardware matching: throughput, memory, and interconnect considerations for training and inference.

- Data center fundamentals: power density, cooling strategies, and workload scheduling for AI clusters.

- Energy‑ and cost‑aware deployment, including quantization, sparsity, and routing for efficiency.

Architecture patterns

Hybrid compute strategies combine frontier APIs, on‑prem accelerators, and edge devices, routed by policy for cost and latency. Data pipelines co‑locate with compute to reduce movement and improve efficiency under heavy multimodal workloads. Sustainability telemetry informs placement and scheduling that balance performance with environmental impact.
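
Policy-based routing across such a hybrid catalog can be expressed as a filter followed by a cost minimization. The sketch below is illustrative only: the target names, prices, latencies, and complexity tiers in `CATALOG` are invented numbers, and a real router would draw them from benchmarks and live telemetry.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Target:
    name: str
    cost_per_1k_tokens: float  # hypothetical price in USD
    p50_latency_ms: int        # hypothetical median latency
    max_complexity: int        # 1 = simple classification, 3 = multi-step reasoning
    on_premises: bool          # True if data never leaves the organization


# Invented catalog entries for illustration.
CATALOG = [
    Target("edge-small", 0.0, 30, 1, True),
    Target("onprem-medium", 0.2, 200, 2, True),
    Target("frontier-api", 3.0, 1200, 3, False),
]


def route(
    complexity: int,
    latency_budget_ms: int,
    sensitive: bool,
    catalog: list = CATALOG,
) -> Optional[Target]:
    """Pick the cheapest target that satisfies capability, latency, and data policy."""
    eligible = [
        t for t in catalog
        if t.max_complexity >= complexity
        and t.p50_latency_ms <= latency_budget_ms
        and (t.on_premises or not sensitive)
    ]
    if not eligible:
        return None  # caller should escalate or relax a constraint explicitly
    return min(eligible, key=lambda t: t.cost_per_1k_tokens)
```

With this catalog, a simple low-latency request routes to `edge-small`, while a sensitive request that needs tier-3 reasoning returns `None`. Returning `None` rather than falling back silently keeps the data policy from being bypassed.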

Practical starter projects

- Cost/perf benchmarking across models and hardware to guide capacity planning and routing rules.

- Inference optimization playbooks: quantization and compile targets aligned to accelerator roadmaps.

- Sustainability dashboards for AI workloads tracking energy, water, and carbon metrics vs. SLAs.

Embodied AI and robotics

What it is

Embodied AI brings perception, planning, and control into physical systems—robots, drones, and autonomous machines that operate in the real world. As AI capabilities mature, robotics increasingly blends with multimodal perception and edge inference for responsive autonomy. This convergence is reshaping logistics, manufacturing, inspection, and service applications.

Why it matters in 2025

Strategic trend outlooks highlight the cross‑acceleration between AI and robotics, where improved reasoning and sensing expand feasible tasks and reliability. Edge AI unlocks low‑latency control loops and resilience, enabling local decisions even with intermittent connectivity. Organizations prioritize safety, compliance, and human‑robot collaboration patterns to scale deployments.

Core capabilities to learn

- Visual and sensor perception, SLAM, and multimodal grounding for robust operation in variable environments.

- Motion planning, task scheduling, and policy learning tuned to safety and throughput requirements.

- Systems engineering for fleets: OTA updates, telemetry, and fallback modes under hardware constraints.

Architecture patterns

Hierarchical control splits local reflexes from higher‑level planning, with cloud agents coordinating across fleets and backends. Multimodal pipelines fuse cameras, depth, and audio with domain knowledge to improve reliability and explainability. Evaluation spans sim‑to‑real testing, scenario coverage, and fail‑safe design verified against operational policies.
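
The split between local reflexes and higher-level planning can be sketched as two layers, with the reflex always getting the last word. The stop threshold, the proportional speed rule, and the `Command` type below are hypothetical simplifications; real controllers run at fixed rates with certified safety components.

```python
from dataclasses import dataclass
from typing import Optional

STOP_DISTANCE_M = 0.5  # hypothetical safety threshold in meters
MAX_SPEED_MPS = 1.0    # hypothetical speed cap in meters per second


@dataclass
class Command:
    velocity: float  # meters per second
    source: str      # which layer produced the command


def planner(goal_distance_m: float) -> Command:
    # High-level layer: speed proportional to remaining distance, capped.
    speed = min(MAX_SPEED_MPS, max(0.0, 0.5 * goal_distance_m))
    return Command(speed, "planner")


def reflex(cmd: Command, obstacle_distance_m: Optional[float]) -> Command:
    # Low-level layer: stop if an obstacle is close or the sensor reading
    # is missing (None), which is treated as a fail-safe condition.
    if obstacle_distance_m is None or obstacle_distance_m < STOP_DISTANCE_M:
        return Command(0.0, "reflex")
    return cmd


def control_tick(goal_distance_m: float, obstacle_distance_m: Optional[float]) -> Command:
    """One control cycle: plan first, then let the reflex layer override."""
    return reflex(planner(goal_distance_m), obstacle_distance_m)
```

Because `reflex` wraps the planner's output, a slow or unavailable planner (for example, a cloud agent during a connectivity drop) cannot delay a stop.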

Practical starter projects

- Vision‑guided pick‑and‑place with on‑device inference and cloud‑based optimization loops.

- Autonomous inspection with event detection, summaries, and human review queues for anomalies.

- Mobile assistant robots in controlled environments with bounded autonomy and safety interlocks.

Putting it all together

A 2025 learning roadmap that compounds quickly is: master agentic patterns, add multimodal grounding, practice evaluation/LLMOps, and deploy a small model to the edge with observability and basic governance controls. Pair hands‑on projects with platform know‑how and emerging regulatory expectations to translate skills into production‑ready value this year. Use domain‑specific problems to anchor learning, then generalize patterns across teams and toolchains for durable impact.