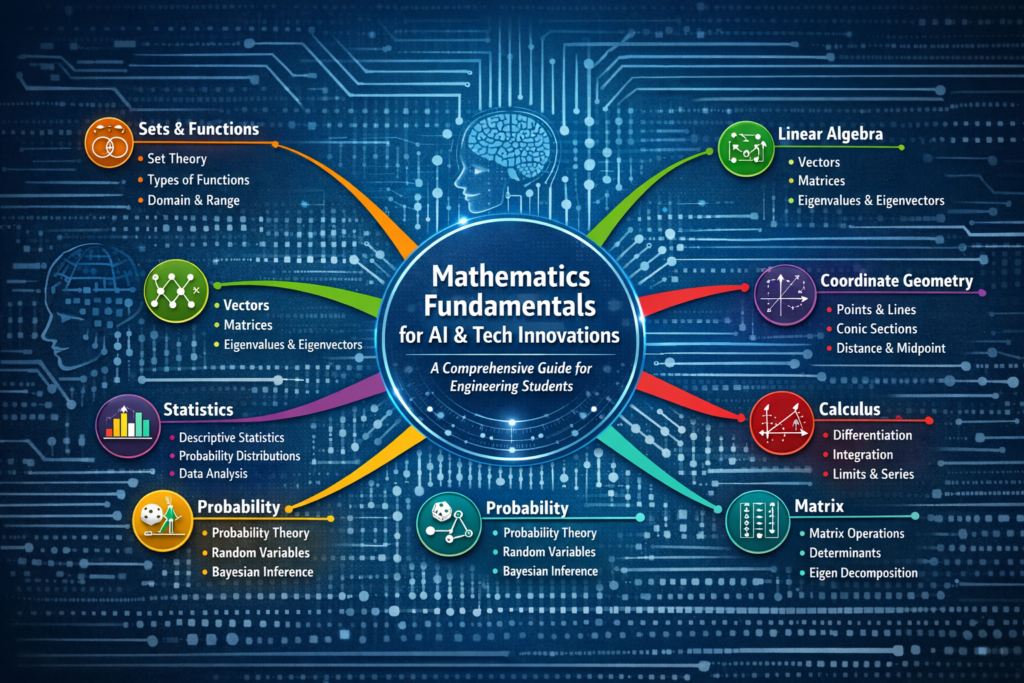

UNIT I: Sets and Functions

1. Sets – The Foundation of Mathematics

Introduction: Sets form the fundamental building blocks of modern mathematics and computer science. A set is a well-defined collection of distinct objects, which can be numbers, letters, or any mathematical entities. In AI and technology, sets are crucial for database design, data structures, and algorithm development.

Core Concepts:

Set Representations: Sets can be represented in roster form {1, 2, 3, 4} or set-builder form {x | x is a natural number less than 5}. This dual representation is essential in programming for defining data collections and constraints.

Empty Set (∅): The set containing no elements. Critical in database operations representing null results and in algorithm design for base cases.

Finite and Infinite Sets: A finite set has a definite number of elements, e.g. {1, 2, 3}, while infinite sets like the natural numbers ℕ = {1, 2, 3, …} extend indefinitely. Understanding how quantities grow without bound is crucial for computational complexity analysis.

Equal Sets: Sets A and B are equal if they contain exactly the same elements, regardless of order. This concept underlies data comparison algorithms.

Subsets: Set A is a subset of B (A ⊆ B) if every element of A is in B. Power set P(A) contains all possible subsets. For a set with n elements, |P(A)| = 2^n.
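The count |P(A)| = 2^n can be checked by enumerating subsets directly. The following is a minimal sketch; the helper name `power_set` and the example set are illustrative choices, not part of the syllabus text.

```python
from itertools import chain, combinations

def power_set(items):
    """Return every subset of `items` as a list of frozensets."""
    elems = list(items)
    # Subsets of size 0, 1, ..., n chained into one sequence.
    subsets = chain.from_iterable(
        combinations(elems, r) for r in range(len(elems) + 1)
    )
    return [frozenset(s) for s in subsets]

A = {1, 2, 3}
P = power_set(A)
print(len(P))                    # 8, which equals 2**3
print(all(s <= A for s in P))    # True: each member is a subset of A
```

Because the number of subsets doubles with each added element, this enumeration is only practical for small sets.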

Intervals: Special subsets of real numbers – Open interval (a, b) = {x | a < x < b}, Closed interval [a, b] = {x | a ≤ x ≤ b}. Essential for defining ranges in optimization problems.

Key Formulas:

• Union: A ∪ B = {x | x ∈ A or x ∈ B}

• Intersection: A ∩ B = {x | x ∈ A and x ∈ B}

• Difference: A – B = {x | x ∈ A and x ∉ B}

• Complement: A’ = U – A = {x | x ∈ U and x ∉ A}

• De Morgan’s Laws: (A ∪ B)’ = A’ ∩ B’ and (A ∩ B)’ = A’ ∪ B’

• |A ∪ B| = |A| + |B| – |A ∩ B|
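The formulas above map directly onto Python's built-in `set` type. This sketch assumes a small universal set U = {1, …, 10} chosen only for illustration, and checks De Morgan's laws and the cardinality formula on one example.

```python
U = set(range(1, 11))      # universal set (an assumption for this example)
A = {1, 2, 3, 4, 5}
B = {4, 5, 6, 7}

def complement(S, universe=U):
    """A' = U - A."""
    return universe - S

union = A | B              # {1, 2, 3, 4, 5, 6, 7}
intersection = A & B       # {4, 5}
difference = A - B         # {1, 2, 3}

# De Morgan's laws
de_morgan_1 = complement(A | B) == complement(A) & complement(B)
de_morgan_2 = complement(A & B) == complement(A) | complement(B)

# |A ∪ B| = |A| + |B| - |A ∩ B|
inclusion_exclusion = len(union) == len(A) + len(B) - len(intersection)

print(de_morgan_1, de_morgan_2, inclusion_exclusion)  # True True True
```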

🔬 Real-World Applications in AI & Tech:

Machine Learning: Feature selection uses set operations to combine or exclude attributes. Training, validation, and test sets are kept as disjoint subsets so that evaluation reflects performance on unseen data.

Database Management: SQL operations (JOIN, UNION, INTERSECT) directly implement set theory. Query optimization relies on set cardinality calculations.

Information Retrieval: Search engines use set operations for Boolean queries. Document similarity measures use set intersection (Jaccard similarity = |A ∩ B| / |A ∪ B|).

Network Security: Access control lists use set membership. Firewall rules implement set operations to filter traffic.

[Figure: Set Theory mind map. Nodes: Representation; Operations (Union ∪, Intersection ∩, Complement ′); Venn Diagrams; Applications (Databases, ML Datasets)]

2. Relations & Functions – Mapping the Mathematical Universe

Introduction: Relations and functions are fundamental mappings between sets that describe how elements correspond to one another. In computer science and AI, functions are the core of algorithms, transformations, and computational models. Every program is essentially a function mapping inputs to outputs.

Relations:

Ordered Pairs: An ordered pair (a, b) has a first element a and second element b. Unlike sets, order matters: (2, 3) ≠ (3, 2). Essential for coordinate systems and key-value pairs in programming.

Cartesian Product: A × B = {(a, b) | a ∈ A and b ∈ B}. If |A| = m and |B| = n, then |A × B| = m × n. Forms the basis for multi-dimensional data structures and relational databases.

Relation Definition: A relation R from set A to set B is a subset of A × B. Domain = {a | (a, b) ∈ R for some b}, Co-domain = B, Range = {b | (a, b) ∈ R for some a}. Relations model connections in graphs, networks, and databases.

Functions:

Function Definition: A function f: A → B is a special relation where each element in domain A maps to exactly one element in codomain B. This uniqueness property ensures predictable, deterministic behavior essential for computation.

Function Types: Constant function f(x) = c, Identity function f(x) = x, Polynomial f(x) = aₙxⁿ + … + a₁x + a₀, Rational f(x) = p(x)/q(x), Modulus f(x) = |x|, Signum function, Exponential f(x) = aˣ, Logarithmic f(x) = log_a(x), Greatest Integer Function f(x) = ⌊x⌋.

Function Operations:

• (f + g)(x) = f(x) + g(x)

• (f – g)(x) = f(x) – g(x)

• (f · g)(x) = f(x) · g(x)

• (f / g)(x) = f(x) / g(x), where g(x) ≠ 0

• (f ∘ g)(x) = f(g(x)) [Composition]

• Domain of f + g: D(f) ∩ D(g)
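Composition is the operation that most often surprises, because order matters. A short sketch, with `f`, `g`, and the helper `compose` chosen purely for illustration:

```python
def compose(f, g):
    """Return the function f ∘ g, i.e. x -> f(g(x))."""
    return lambda x: f(g(x))

def f(x):
    return x + 1

def g(x):
    return 2 * x

f_after_g = compose(f, g)   # x -> 2x + 1
g_after_f = compose(g, f)   # x -> 2(x + 1)

print(f_after_g(3))   # 7
print(g_after_f(3))   # 8
```

The two results differ, so f ∘ g and g ∘ f are in general different functions.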

🔬 Real-World Applications in AI & Tech:

Neural Networks: Activation functions (sigmoid, ReLU, tanh) are mathematical functions transforming neuron inputs. Backpropagation uses function composition and chain rule.

Computer Graphics: Transformation functions (translation, rotation, scaling) map coordinates. Bezier curves use polynomial functions for smooth rendering.

Signal Processing: Fourier transforms decompose signals into frequency components. Filters are functions modifying signal characteristics.

Data Science: Feature engineering applies functions to transform raw data. Normalization functions (min-max, z-score) standardize datasets for ML algorithms.

3. Trigonometric Functions – The Mathematics of Oscillation

Introduction: Trigonometric functions describe periodic phenomena and circular motion. From analyzing sound waves to modeling seasonal patterns in time-series data, trigonometry is indispensable in signal processing, computer vision, robotics, and physics simulations.

Core Concepts:

Angle Measurement: Degrees (360° = full circle) and Radians (2π radians = full circle). Conversion: Radians = Degrees × (π/180), Degrees = Radians × (180/π). Radians are preferred in calculus and programming because derivative formulas such as d/dx (sin x) = cos x hold without extra constants only in radians, and most math libraries expect radian arguments.

Unit Circle Definition: For angle θ, point P(x, y) on unit circle: cos θ = x, sin θ = y, tan θ = y/x (x ≠ 0). This geometric interpretation extends to all angles, including negative angles and angles > 360°.

Function Properties: sin θ and cos θ have period 2π, range [-1, 1]. tan θ has period π, range (-∞, ∞), with discontinuities at odd multiples of π/2. These properties govern wave behavior.

Fundamental Identities:

• sin²x + cos²x = 1 [Pythagorean Identity]

• 1 + tan²x = sec²x

• 1 + cot²x = csc²x

Sum and Difference Formulas:

• sin(x ± y) = sin x cos y ± cos x sin y

• cos(x ± y) = cos x cos y ∓ sin x sin y

• tan(x ± y) = (tan x ± tan y) / (1 ∓ tan x tan y)

Double and Triple Angle Formulas:

• sin 2x = 2 sin x cos x

• cos 2x = cos²x – sin²x = 2cos²x – 1 = 1 – 2sin²x

• tan 2x = 2 tan x / (1 – tan²x)

• sin 3x = 3 sin x – 4 sin³x

• cos 3x = 4 cos³x – 3 cos x

• tan 3x = (3 tan x – tan³x) / (1 – 3 tan²x)
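A quick numerical spot check can catch sign errors when memorizing these identities. The sketch below evaluates both sides at arbitrary angles (0.7 and 0.3 radians, chosen only for illustration); agreement at sample points is a sanity check, not a proof.

```python
import math

x, y = 0.7, 0.3           # arbitrary test angles in radians
t = math.tan(x)

checks = {
    "pythagorean": math.isclose(math.sin(x) ** 2 + math.cos(x) ** 2, 1.0),
    "sin_sum": math.isclose(
        math.sin(x + y),
        math.sin(x) * math.cos(y) + math.cos(x) * math.sin(y),
    ),
    "cos_diff": math.isclose(
        math.cos(x - y),
        math.cos(x) * math.cos(y) + math.sin(x) * math.sin(y),
    ),
    "cos_double": math.isclose(math.cos(2 * x), 1 - 2 * math.sin(x) ** 2),
    "sin_triple": math.isclose(
        math.sin(3 * x), 3 * math.sin(x) - 4 * math.sin(x) ** 3
    ),
    "tan_triple": math.isclose(
        math.tan(3 * x), (3 * t - t ** 3) / (1 - 3 * t ** 2)
    ),
}

for name, ok in checks.items():
    print(name, ok)
```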

🔬 Real-World Applications in AI & Tech:

Signal Processing & Audio: Fourier analysis decomposes audio signals into sine and cosine waves. MP3 compression, noise reduction, and equalization all use trigonometric transforms. A pure tone of amplitude A, frequency f, and phase φ is modeled as s(t) = A sin(2πft + φ).

Computer Vision & Image Processing: Discrete Cosine Transform (DCT) for JPEG compression. Edge detection uses trigonometric gradients. Hough transform for line detection relies on parametric equations: ρ = x cos θ + y sin θ.

Robotics & Animation: Inverse kinematics uses trigonometry to calculate joint angles. Rotation matrices employ sin and cos for 3D transformations. Smooth motion trajectories use sinusoidal interpolation.

Machine Learning: Positional encoding in Transformers uses sine and cosine functions: PE(pos, 2i) = sin(pos/10000^(2i/d)), PE(pos, 2i+1) = cos(pos/10000^(2i/d)). This helps models understand sequence order.
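The positional-encoding formula can be sketched in a few lines of plain Python. The function name `positional_encoding` and the parameter `d_model` (standing for d in the formula) are illustrative; real frameworks compute this with vectorized tensor operations.

```python
import math

def positional_encoding(pos, d_model):
    """Return the length-d_model encoding vector for position `pos`.

    Even index k uses sin(pos / 10000**(k / d_model));
    the following odd index k + 1 uses the cosine of the same angle.
    """
    pe = [0.0] * d_model
    for k in range(0, d_model, 2):      # k plays the role of 2i
        angle = pos / (10000 ** (k / d_model))
        pe[k] = math.sin(angle)
        if k + 1 < d_model:
            pe[k + 1] = math.cos(angle)
    return pe

print(positional_encoding(0, 4))   # [0.0, 1.0, 0.0, 1.0]
print(positional_encoding(1, 4))
```

Every component stays within [-1, 1], and each sine/cosine pair oscillates at a different frequency, so distinct positions receive distinct vectors.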

UNIT II: Algebra – The Language of Abstract Mathematics

1. Complex Numbers – Extending the Number System

Introduction: Complex numbers extend real numbers by introducing i = √(-1), enabling solutions to equations like x² + 1 = 0. Critical in electrical engineering (AC circuit analysis), quantum mechanics, signal processing (Fourier transforms), and control systems. Every non-constant polynomial equation has at least one solution in the complex numbers (Fundamental Theorem of Algebra).

Core Concepts:

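Complex Number Form: z = a + bi where a is real part Re(z), b is imaginary part Im(z), and i² = -1. Complex conjugate: z̄ = a – bi. Magnitude: |z| = √(a² + b²). Argument: arg(z) = tan⁻¹(b/a) when a > 0; in the other quadrants the angle must be shifted by ±π, which the two-argument arctangent atan2(b, a) handles in every case.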

Argand Plane: Geometric representation with real axis (horizontal) and imaginary axis (vertical). Complex number z = a + bi corresponds to point (a, b). This visualization is powerful for understanding complex operations geometrically.

Polar Form: z = r(cos θ + i sin θ) = r e^(iθ) where r = |z| and θ = arg(z). Euler’s formula: e^(iθ) = cos θ + i sin θ. This form simplifies multiplication and powers.

Operations and Properties:

• Addition: (a + bi) + (c + di) = (a + c) + (b + d)i

• Multiplication: (a + bi)(c + di) = (ac – bd) + (ad + bc)i

• Division: (a + bi)/(c + di) = [(ac + bd) + (bc – ad)i] / (c² + d²)

• De Moivre’s Theorem: (cos θ + i sin θ)ⁿ = cos(nθ) + i sin(nθ)

• z · z̄ = |z|²

• |z₁ · z₂| = |z₁| · |z₂|
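Python has a built-in `complex` type and a standard `cmath` module, so these properties can be tried out directly. The numbers below are arbitrary examples; note that Python writes the imaginary unit as `j`.

```python
import cmath
import math

z = 3 + 4j
w = 1 - 2j

modulus = abs(z)                 # 5.0
argument = cmath.phase(z)        # atan2(4, 3), valid in every quadrant
product_with_conj = z * z.conjugate()   # (25+0j), equals |z|**2

# Polar form round trip: z = r e^(iθ)
r, theta = cmath.polar(z)
z_back = cmath.rect(r, theta)

# De Moivre's theorem for n = 5 at an arbitrary angle
n, t = 5, 0.4
lhs = (math.cos(t) + 1j * math.sin(t)) ** n
rhs = complex(math.cos(n * t), math.sin(n * t))

print(modulus, argument)
print(cmath.isclose(z_back, z))                   # True
print(abs(lhs - rhs) < 1e-12)                     # True
print(math.isclose(abs(z * w), abs(z) * abs(w)))  # True
```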

🔬 Real-World Applications in AI & Tech:

Signal Processing: Fast Fourier Transform (FFT) uses complex exponentials to analyze frequency components. Communications systems encode information in phase and amplitude of complex signals.

Quantum Computing: Quantum states are represented as complex vectors. Quantum gates are unitary matrices with complex entries. Superposition exploits complex probability amplitudes.

Control Systems: Transfer functions H(s) use complex variable s = σ + jω. Stability analysis examines pole locations in complex plane. Bode plots visualize frequency response.

2. Linear Inequalities – Defining Feasible Regions

Introduction: Linear inequalities define ranges and constraints. Essential for optimization problems, resource allocation, and machine learning constraint satisfaction. Unlike equations with specific solutions, inequalities describe solution regions.

Standard Forms: ax + b < c, ax + b ≤ c, ax + b > c, ax + b ≥ c. Solution represented on number line. Rules: Adding/subtracting same number preserves inequality. Multiplying/dividing by positive number preserves inequality. Multiplying/dividing by negative number reverses inequality.

🔬 Applications:

Machine Learning: SVM (Support Vector Machines) use inequalities to define margin constraints: yᵢ(w·xᵢ + b) ≥ 1. Regularization can be written as an inequality constraint on the weights (for example ‖w‖ ≤ t) to limit overfitting.

3. Permutations and Combinations – Counting Arrangements

Introduction: Permutations and combinations are fundamental counting techniques. Permutations count ordered arrangements, combinations count unordered selections. These concepts underlie probability, algorithm analysis, and cryptography.

Core Concepts:

Fundamental Principle of Counting: If task 1 can be done in m ways and task 2 in n ways, both can be done in m × n ways. Extends to multiple tasks. Forms basis of complexity analysis in algorithms.

Factorial: n! = n × (n-1) × (n-2) × … × 2 × 1, with 0! = 1. Represents total arrangements of n distinct objects. Growth rate O(n!) makes brute-force approaches infeasible for large n.

Key Formulas:

• Permutations: ⁿPᵣ = n!/(n-r)! [Ordered arrangements of r objects from n]

• Combinations: ⁿCᵣ = n!/[r!(n-r)!] [Unordered selections of r from n]

• Relation: ⁿPᵣ = r! × ⁿCᵣ

• ⁿCᵣ = ⁿCₙ₋ᵣ [Symmetry property]

• ⁿCᵣ + ⁿCᵣ₋₁ = ⁿ⁺¹Cᵣ [Pascal’s identity]
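The standard library exposes these counts as `math.perm` and `math.comb` (Python 3.8 or later). The sketch below checks each identity in the list for one illustrative pair, n = 7 and r = 3.

```python
import math

n, r = 7, 3

nPr = math.perm(n, r)    # 7 * 6 * 5 = 210
nCr = math.comb(n, r)    # 35

relation = nPr == math.factorial(r) * nCr
symmetry = math.comb(n, r) == math.comb(n, n - r)
pascal = math.comb(n, r) + math.comb(n, r - 1) == math.comb(n + 1, r)

print(nPr, nCr)                      # 210 35
print(relation, symmetry, pascal)    # True True True
```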

🔬 Real-World Applications in AI & Tech:

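Algorithm Analysis: Brute-force search over all orderings of n items (for example, trying every route in the travelling salesman problem) takes O(n!) time. Enumerating all subsets takes O(2ⁿ). Understanding combinatorial explosion guides algorithm design.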

Cryptography: Key space size determines security. Password with n characters from alphabet of size m: mⁿ possibilities. RSA relies on difficulty of factoring large numbers.

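Machine Learning: Feature selection: choosing k features from n total gives ⁿCₖ candidate subsets. Leave-p-out cross-validation evaluates all ⁿCₚ ways of holding out p samples from n, which is why the cheaper k-fold scheme (k disjoint folds) is usually preferred.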

Network Design: Number of possible connections in network of n nodes: ⁿC₂ = n(n-1)/2. Graph coloring and scheduling problems use combinatorial techniques.

4. Binomial Theorem – Expanding Powers

Introduction: The Binomial Theorem provides formula for expanding (a + b)ⁿ without multiplying repeatedly. Applications span probability distributions, approximations, and numerical methods.

Binomial Theorem:

(a + b)ⁿ = Σ(k=0 to n) ⁿCₖ aⁿ⁻ᵏ bᵏ

= ⁿC₀aⁿ + ⁿC₁aⁿ⁻¹b + ⁿC₂aⁿ⁻²b² + … + ⁿCₙbⁿ

Pascal’s Triangle: Each entry is sum of two entries above it.

Row n contains coefficients for (a+b)ⁿ.

Properties:

• Sum of coefficients: Put a = b = 1, get 2ⁿ

• Alternating sum: Put a = 1, b = -1, get 0 (for n ≥ 1)

• Middle term(s) have largest coefficient

🔬 Applications:

Probability: Binomial distribution P(X = k) = ⁿCₖ pᵏ(1-p)ⁿ⁻ᵏ models number of successes in n independent trials. Used in A/B testing, quality control, and reliability engineering.
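The binomial distribution formula is short enough to compute directly. In this sketch the function name `binomial_pmf` and the fair-coin parameters (n = 10, p = 0.5) are illustrative assumptions.

```python
import math

def binomial_pmf(k, n, p):
    """P(X = k) for n independent trials with success probability p."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

n, p = 10, 0.5
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

print(pmf[5])      # 252/1024 = 0.24609375
print(sum(pmf))    # probabilities over k = 0..n sum to 1
```

That the probabilities sum to 1 is the Binomial Theorem itself with a = 1 − p and b = p, since (a + b)ⁿ = 1ⁿ = 1.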

Approximations: For small x, (1 + x)ⁿ ≈ 1 + nx (linear approximation). Used in numerical methods and error analysis.

5. Sequences and Series – Patterns and Sums

Introduction: Sequences are ordered lists of numbers following a pattern. Series are sums of sequence terms. These concepts model growth patterns, convergence behavior, and infinite processes fundamental to calculus and analysis.

Arithmetic Progression (AP):

Definition: Sequence where difference between consecutive terms is constant. General term: aₙ = a + (n-1)d where a is first term, d is common difference.

• nth term: aₙ = a + (n-1)d

• Sum of n terms: Sₙ = n/2[2a + (n-1)d] = n/2(a + l) where l is last term

• Arithmetic Mean: If a, A, b are in AP, then A = (a+b)/2

Geometric Progression (GP):

Definition: Sequence where ratio between consecutive terms is constant. General term: aₙ = arⁿ⁻¹ where a is first term, r is common ratio.

• nth term: aₙ = arⁿ⁻¹

• Sum of n terms: Sₙ = a(rⁿ – 1)/(r – 1) for r ≠ 1, or Sₙ = na for r = 1

• Infinite GP sum: S∞ = a/(1-r) for |r| < 1 (converges)

• Geometric Mean: If a, G, b are in GP, then G = √(ab)

• AM ≥ GM: (a+b)/2 ≥ √(ab) with equality iff a = b
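The closed-form sums can be compared against term-by-term addition. The function names and sample values below are illustrative only.

```python
import math

def ap_sum(a, d, n):
    """Sum of the first n terms of an AP: n/2 [2a + (n-1)d]."""
    return n * (2 * a + (n - 1) * d) / 2

def gp_sum(a, r, n):
    """Sum of the first n terms of a GP."""
    if r == 1:
        return a * n
    return a * (r ** n - 1) / (r - 1)

def gp_infinite_sum(a, r):
    """Sum of an infinite GP; only defined for |r| < 1."""
    if abs(r) >= 1:
        raise ValueError("infinite GP converges only for |r| < 1")
    return a / (1 - r)

# Compare with brute-force sums
ap_brute = sum(2 + 3 * k for k in range(10))     # a = 2, d = 3, n = 10
gp_brute = sum(3 * 2 ** k for k in range(5))     # a = 3, r = 2, n = 5

print(ap_sum(2, 3, 10), ap_brute)    # 155.0 155
print(gp_sum(3, 2, 5), gp_brute)     # 93.0 93
print(gp_infinite_sum(1, 0.5))       # 2.0

# AM >= GM for a = 4, b = 9
am, gm = (4 + 9) / 2, math.sqrt(4 * 9)
print(am >= gm)                      # True (6.5 >= 6.0)
```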

🔬 Real-World Applications in AI & Tech:

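Algorithm Analysis: Geometric sequences and series appear in analyzing divide-and-conquer algorithms. In binary search, T(n) = T(n/2) + O(1): the problem sizes n, n/2, n/4, … form a GP with ratio 1/2, which reaches 1 after about log₂ n steps, giving O(log n). When the work per level is proportional to the size, as in T(n) = T(n/2) + O(n), the costs n + n/2 + n/4 + … sum as a geometric series to less than 2n, giving O(n).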

Computer Graphics: Antialiasing and texture mapping use geometric series. Infinite reflections in ray tracing sum contributions as geometric series.

Financial Modeling: Compound interest A = P(1 + r)ⁿ is geometric growth. Present value calculations use infinite GP for perpetuities.

Machine Learning: Learning rate decay often follows geometric progression. Exponential moving average uses geometric weighting of past values.

UNIT III: Coordinate Geometry – Mathematics Meets Space

1. Straight Lines – Linear Relationships

Introduction: Straight lines represent linear relationships between variables. Fundamental in linear regression, optimization, computer graphics, and any system with proportional relationships. The equation of a line captures rate of change (slope) and initial value (intercept).

Core Concepts:

Slope: Measure of steepness. m = (y₂ – y₁)/(x₂ – x₁) = tan θ where θ is angle with positive x-axis. Positive slope: line rises; negative slope: line falls; zero slope: horizontal; undefined slope: vertical.

Angle Between Lines: If lines have slopes m₁ and m₂, then tan θ = |(m₁ – m₂)/(1 + m₁m₂)|. Parallel lines: m₁ = m₂. Perpendicular lines: m₁ · m₂ = -1.

Forms of Line Equations:

• Slope-intercept form: y = mx + c (m = slope, c = y-intercept)

• Point-slope form: y – y₁ = m(x – x₁)

• Two-point form: (y – y₁)/(y₂ – y₁) = (x – x₁)/(x₂ – x₁)

• Intercept form: x/a + y/b = 1 (a = x-intercept, b = y-intercept)

• Normal form: x cos α + y sin α = p

• General form: Ax + By + C = 0

Distance from Point to Line:

d = |Ax₁ + By₁ + C|/√(A² + B²) for line Ax + By + C = 0 and point (x₁, y₁)
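A direct translation of the distance formula, as a sketch. The function name and the example line 3x + 4y − 10 = 0 are illustrative assumptions.

```python
import math

def point_line_distance(A, B, C, x1, y1):
    """Distance from (x1, y1) to the line Ax + By + C = 0."""
    norm = math.hypot(A, B)          # sqrt(A**2 + B**2)
    if norm == 0:
        raise ValueError("A and B cannot both be zero")
    return abs(A * x1 + B * y1 + C) / norm

print(point_line_distance(3, 4, -10, 0, 0))   # 2.0
print(point_line_distance(3, 4, -10, 2, 1))   # 0.0, the point lies on the line
```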

🔬 Real-World Applications in AI & Tech:

Linear Regression: Best-fit line minimizes sum of squared errors. Equation y = β₀ + β₁x models relationship between variables. Used in predictive analytics, trend analysis, and forecasting.

Computer Graphics: Line drawing algorithms (Bresenham’s) efficiently rasterize lines. Clipping algorithms determine visible line segments. Intersection calculations for collision detection.

Neural Networks: Perceptron implements linear separator: w·x + b = 0. Support Vector Machines find optimal separating hyperplane (generalized line in high dimensions).

Robotics: Path planning uses line segments. Inverse kinematics solves for joint angles using geometric line relationships.

2. Conic Sections – Curves of Nature

Introduction: Conic sections (circle, ellipse, parabola, hyperbola) arise from intersecting cone with plane. These curves appear in orbital mechanics, antenna design, optics, and optimization. Each has unique geometric properties exploited in engineering.

Circle:

Definition: Set of points equidistant from center. Standard equation: (x – h)² + (y – k)² = r² where (h, k) is center and r is radius. General form: x² + y² + 2gx + 2fy + c = 0, center (-g, -f), radius √(g² + f² – c).

Parabola:

Definition: Set of points equidistant from focus and directrix. Standard equations: y² = 4ax (opens right), x² = 4ay (opens up). Vertex at origin, focus at (a, 0) or (0, a), directrix x = -a or y = -a.

Ellipse:

Definition: Set of points where sum of distances from two foci is constant. Standard equation: x²/a² + y²/b² = 1 (a > b). Semi-major axis a, semi-minor axis b. Eccentricity e = √(1 – b²/a²) where 0 < e < 1.

Hyperbola:

Definition: Set of points where difference of distances from two foci is constant. Standard equation: x²/a² – y²/b² = 1. Eccentricity e = √(1 + b²/a²) where e > 1. Asymptotes: y = ±(b/a)x.

🔬 Real-World Applications in AI & Tech:

Satellite Communication: Parabolic reflectors focus signals at focal point. Satellite orbits follow elliptical paths (Kepler’s laws). Geostationary satellites use circular orbits.

Computer Vision: Ellipse detection for object recognition. Conic fitting for camera calibration. Circle detection (Hough transform) identifies circular features.

Physics Simulations: Projectile motion follows parabolic trajectory. Planetary orbits are elliptical. Hyperbolic trajectories for escape velocity calculations.

Optimization: Level curves of quadratic functions are conics. Ellipsoid method for convex optimization. Trust regions use elliptical constraints.

3. Three-Dimensional Geometry – Expanding to Space

Introduction: 3D geometry extends 2D concepts into space. Essential for computer graphics, robotics, molecular modeling, and any spatial reasoning. Coordinates (x, y, z) locate points in 3D space.

Distance Formula in 3D:

d = √[(x₂-x₁)² + (y₂-y₁)² + (z₂-z₁)²]

Section Formula: Point dividing line joining (x₁,y₁,z₁) and (x₂,y₂,z₂) in ratio m:n:

((mx₂+nx₁)/(m+n), (my₂+ny₁)/(m+n), (mz₂+nz₁)/(m+n))
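Both formulas work coordinate by coordinate, which makes them easy to express with tuples. A minimal sketch with illustrative names and points; the section formula here is the internal-division case with m + n ≠ 0.

```python
import math

def distance_3d(p, q):
    """Euclidean distance between points p and q in 3D."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(p, q)))

def section_point(p, q, m, n):
    """Point dividing the segment from p to q in the ratio m:n."""
    return tuple((m * b + n * a) / (m + n) for a, b in zip(p, q))

print(distance_3d((1, 2, 3), (4, 6, 3)))              # 5.0
print(section_point((0, 0, 0), (3, 6, 9), 1, 2))      # (1.0, 2.0, 3.0)
print(section_point((1, 2, 3), (3, 4, 5), 1, 1))      # midpoint (2.0, 3.0, 4.0)
```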

🔬 Applications:

3D Graphics & Gaming: All 3D rendering requires coordinate transformations. Camera position, object locations, lighting calculations use 3D coordinates.

Robotics: Forward kinematics maps joint angles to end-effector position in 3D space. Path planning navigates 3D environments avoiding obstacles.

UNIT V: Statistics and Probability – Quantifying Uncertainty

1. Statistics – Describing Data

Introduction: Statistics provides tools to collect, analyze, and interpret data. Dispersion measures quantify data spread and variability. Essential for understanding data distributions, detecting outliers, and assessing model performance in machine learning.

Measures of Dispersion:

Range: Difference between maximum and minimum values. Simple but sensitive to outliers. Range = Max – Min.

Mean Deviation: Average absolute deviation from mean. MD = Σ|xᵢ – x̄|/n. Provides sense of typical deviation.

Variance: Average squared deviation from mean. For population: σ² = Σ(xᵢ – μ)²/N. For sample: s² = Σ(xᵢ – x̄)²/(n-1). Squaring penalizes larger deviations more heavily.

Standard Deviation: Square root of variance. σ = √(σ²). Same units as original data. For normal distribution, ~68% data within 1σ, ~95% within 2σ, ~99.7% within 3σ.
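The four measures can be computed side by side on a small data set. The values below are an illustrative sample, not data from the syllabus; the population formulas divide by n and the sample variance by n − 1.

```python
import math

data = [2, 4, 4, 4, 5, 5, 7, 9]     # illustrative data
n = len(data)
mean = sum(data) / n                 # 5.0

data_range = max(data) - min(data)                           # 7
mean_deviation = sum(abs(x - mean) for x in data) / n        # 1.5
population_variance = sum((x - mean) ** 2 for x in data) / n # 4.0
sample_variance = sum((x - mean) ** 2 for x in data) / (n - 1)
population_std = math.sqrt(population_variance)              # 2.0

print(data_range, mean_deviation, population_variance, population_std)
print(round(sample_variance, 4))     # 32/7, slightly larger than 4.0
```

The standard library's `statistics` module provides `pvariance`, `variance`, and `pstdev` for the same quantities.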

🔬 Real-World Applications:

Machine Learning: Feature scaling uses mean and standard deviation for normalization: z = (x – μ)/σ. Model evaluation uses variance to assess prediction consistency.

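Quality Control: Six Sigma methodology aims for ≤3.4 defects per million opportunities. That figure corresponds to specification limits 6σ from the target while allowing for a 1.5σ long-term drift of the process mean. Control charts monitor process variation.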

Financial Analysis: Volatility measured by standard deviation of returns. Risk assessment compares return variance across investments.

2. Probability – Mathematics of Randomness

Introduction: Probability quantifies uncertainty and likelihood. Foundation of statistics, machine learning, cryptography, and decision theory. Enables reasoning about random events and making predictions under uncertainty.

Core Concepts:

Sample Space (S): Set of all possible outcomes. For coin flip: S = {H, T}. For dice: S = {1, 2, 3, 4, 5, 6}.

Event: Subset of sample space. Simple event has one outcome. Compound event has multiple outcomes.

Types of Events: Mutually exclusive (can’t occur simultaneously), Exhaustive (cover entire sample space), Independent (occurrence of one doesn’t affect other), Complementary (A and A’ partition sample space).

Probability Axioms:

• 0 ≤ P(A) ≤ 1 for any event A

• P(S) = 1 (certainty)

• P(∅) = 0 (impossibility; a consequence of the other axioms rather than a separate axiom)

• For mutually exclusive events: P(A ∪ B) = P(A) + P(B)

Probability Rules:

• Addition Rule: P(A ∪ B) = P(A) + P(B) – P(A ∩ B)

• Complement Rule: P(A’) = 1 – P(A)

• Multiplication Rule: P(A ∩ B) = P(A) · P(B|A)

• For independent events: P(A ∩ B) = P(A) · P(B)
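For equally likely outcomes, events are just subsets of the sample space, so the rules can be verified exactly with fractions. The events below on a single fair die are illustrative choices.

```python
from fractions import Fraction

S = set(range(1, 7))            # sample space of one fair die

def prob(event):
    """P(event) for equally likely outcomes in S."""
    return Fraction(len(event & S), len(S))

A = {2, 4, 6}                   # "even"
B = {4, 5, 6}                   # "greater than 3"
C = {1, 2}                      # "at most 2"

addition_rule = prob(A | B) == prob(A) + prob(B) - prob(A & B)
complement_rule = prob(S - A) == 1 - prob(A)

p_B_given_A = prob(A & B) / prob(A)            # 2/3
multiplication_rule = prob(A & B) == prob(A) * p_B_given_A

A_B_independent = prob(A & B) == prob(A) * prob(B)   # False: 1/3 vs 1/4
A_C_independent = prob(A & C) == prob(A) * prob(C)   # True: 1/6 both sides

print(addition_rule, complement_rule, multiplication_rule)
print(A_B_independent, A_C_independent)
```

Using `Fraction` avoids floating-point rounding, so the equality checks are exact.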

🔬 Real-World Applications in AI & Tech:

Machine Learning Classification: Probabilistic classifiers output P(class|features). Naive Bayes assumes feature independence. Softmax converts scores to probabilities: P(yᵢ) = e^(zᵢ)/Σe^(zⱼ).

Information Theory: Entropy H(X) = -Σ P(x)log P(x) measures uncertainty. Used in decision trees (information gain) and compression algorithms.

Cryptography: Random number generation for keys. Probability of guessing key determines security level. Birthday paradox affects hash collision probability.

Reliability Engineering: For independent components in series, system reliability = P(system works) = Π P(component works). Failure analysis uses probability distributions.

ADVANCED TOPICS

Relations and Functions (Advanced)

Types of Relations: Understanding relation properties is crucial for database design, graph theory, and equivalence classes in algorithms.

Reflexive: Every element related to itself. xRx for all x. Example: “equals” relation. Used in defining equivalence.

Symmetric: If xRy then yRx. Example: “is sibling of”. Important in undirected graphs.

Transitive: If xRy and yRz then xRz. Example: “greater than”. Crucial for ordering and reachability.

Equivalence Relation: Reflexive + Symmetric + Transitive. Partitions set into equivalence classes. Used in classification and clustering.
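On a finite set, a relation can be stored as a set of ordered pairs and the three properties checked by brute force. The helper names and the two example relations ("same parity" and "less than" on {0, …, 5}) are illustrative.

```python
def is_reflexive(R, X):
    return all((x, x) in R for x in X)

def is_symmetric(R):
    return all((b, a) in R for (a, b) in R)

def is_transitive(R):
    return all((a, d) in R for (a, b) in R for (c, d) in R if b == c)

def is_equivalence(R, X):
    return is_reflexive(R, X) and is_symmetric(R) and is_transitive(R)

def equivalence_classes(R, X):
    """Group elements of X into classes (assumes R is an equivalence)."""
    classes = []
    for x in X:
        cls = frozenset(y for y in X if (x, y) in R)
        if cls not in classes:
            classes.append(cls)
    return classes

X = range(6)
same_parity = {(a, b) for a in X for b in X if (a - b) % 2 == 0}
less_than = {(a, b) for a in X for b in X if a < b}

print(is_equivalence(same_parity, X))          # True
print(equivalence_classes(same_parity, X))     # evens and odds
print(is_reflexive(less_than, X), is_symmetric(less_than),
      is_transitive(less_than))                # False False True
```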

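One-to-One (Injective): Different inputs map to different outputs. f(x₁) ≠ f(x₂) if x₁ ≠ x₂. Ensures the function can be inverted on its range. Hash functions cannot be injective when they map a large input space to fixed-size outputs, so they aim to make collisions rare and hard to find.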

Onto (Surjective): Every element in codomain is mapped. For every y, exists x such that f(x) = y. Ensures full coverage.

Bijective: Both one-to-one and onto. Establishes one-to-one correspondence. Invertible functions are bijective.

Inverse Trigonometric Functions

Principal Value Ranges:

• sin⁻¹(x): Domain [-1,1], Range [-π/2, π/2]

• cos⁻¹(x): Domain [-1,1], Range [0, π]

• tan⁻¹(x): Domain ℝ, Range (-π/2, π/2)

Key Properties:

• sin⁻¹(sin x) = x if x ∈ [-π/2, π/2]

• sin(sin⁻¹ x) = x if x ∈ [-1, 1]

• sin⁻¹(-x) = -sin⁻¹(x)

• cos⁻¹(-x) = π – cos⁻¹(x)

• tan⁻¹(-x) = -tan⁻¹(x)

Matrices – Linear Transformations

Introduction: Matrices represent linear transformations, systems of equations, and multi-dimensional data. Fundamental in computer graphics (transformations), machine learning (data and weights), and quantum computing (state operations).

Matrix Types: Row matrix (1×n), Column matrix (m×1), Square matrix (n×n), Diagonal matrix (non-zero only on diagonal), Identity matrix I (ones on diagonal), Zero matrix O, Symmetric (A = Aᵀ), Skew-symmetric (A = -Aᵀ).

Matrix Operations:

• Addition: (A + B)ᵢⱼ = Aᵢⱼ + Bᵢⱼ

• Scalar Multiplication: (kA)ᵢⱼ = k·Aᵢⱼ

• Multiplication: (AB)ᵢⱼ = Σₖ Aᵢₖ Bₖⱼ

• Transpose: (Aᵀ)ᵢⱼ = Aⱼᵢ

• (AB)ᵀ = BᵀAᵀ

• Matrix multiplication is NOT commutative: AB ≠ BA generally

• (AB)C = A(BC) [Associative]

• A(B + C) = AB + AC [Distributive]
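The multiplication rule (AB)ᵢⱼ = Σₖ Aᵢₖ Bₖⱼ can be written with nested lists. This is a teaching sketch with illustrative names and matrices; numerical work would normally use a library such as NumPy.

```python
def matmul(A, B):
    """Product of matrices stored as lists of rows."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B)))
         for j in range(len(B[0]))]
        for i in range(len(A))
    ]

def transpose(A):
    return [list(row) for row in zip(*A)]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]

AB = matmul(A, B)     # [[2, 1], [4, 3]]
BA = matmul(B, A)     # [[3, 4], [1, 2]]

print(AB, BA, AB != BA)                                       # not commutative
print(transpose(AB) == matmul(transpose(B), transpose(A)))    # True
```

Multiplying by B on the right swaps the columns of A, while multiplying on the left swaps its rows, which is why the two products differ.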

🔬 Applications:

Computer Graphics: Transformation matrices for rotation, scaling, translation. 3D graphics pipeline uses 4×4 matrices for homogeneous coordinates.

Neural Networks: Weight matrices W connect layers. Forward pass: a⁽ˡ⁾ = σ(W⁽ˡ⁾a⁽ˡ⁻¹⁾ + b⁽ˡ⁾). Entire network is composition of matrix operations.

Image Processing: Images as matrices. Convolution filters are small matrices (kernels). Operations like blurring and sharpening slide the kernel over the image and take element-wise products and sums at each position.

Determinants – Matrix Properties

Determinant Formulas:

• 2×2: |A| = ad – bc for A = [[a,b],[c,d]]

• 3×3: Expand along row/column using minors and cofactors

• Properties: |AB| = |A||B|, |Aᵀ| = |A|, |kA| = kⁿ|A| for n×n matrix

• |A⁻¹| = 1/|A|

• If |A| = 0, matrix is singular (non-invertible)
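Cofactor expansion along the first row gives a compact recursive definition. The sketch below is fine for small matrices but takes factorial time, so practical code uses elimination-based methods; the example matrices are illustrative.

```python
def det(M):
    """Determinant by cofactor expansion along the first row."""
    n = len(M)
    if n == 1:
        return M[0][0]
    if n == 2:
        return M[0][0] * M[1][1] - M[0][1] * M[1][0]
    total = 0
    for j in range(n):
        # Minor: delete row 0 and column j.
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total

A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
A_T = [list(row) for row in zip(*A)]
two_A = [[2 * x for x in row] for row in A]

print(det([[1, 2], [3, 4]]))      # -2
print(det(A))                     # 18
print(det(A_T) == det(A))         # True: |Aᵀ| = |A|
print(det(two_A) == 2 ** 3 * det(A))   # True: |kA| = kⁿ|A| with n = 3
print(det([[1, 2], [2, 4]]))      # 0, so this matrix is singular
```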

Applications: Determinant measures scaling factor of linear transformation. Zero determinant means transformation collapses dimension. Used in solving linear systems (Cramer’s rule), computing areas/volumes, and eigenvalue problems.

Continuity and Differentiability

Continuity: Function f is continuous at x=a if lim(x→a) f(x) = f(a). Intuitively, can draw graph without lifting pencil. Critical for optimization convergence and numerical stability.

Differentiability: Function is differentiable at point if derivative exists there. Differentiability implies continuity, but not vice versa. |x| is continuous everywhere but not differentiable at x=0.

Chain Rule: For y = f(u) and u = g(x):

dy/dx = (dy/du) · (du/dx) = f'(g(x)) · g'(x)

Essential for backpropagation in neural networks, where gradients flow backward through composed functions.

Applications of Derivatives – Optimization

Increasing/Decreasing: f'(x) > 0 → f increasing. f'(x) < 0 → f decreasing. Critical points where f'(x) = 0 or undefined.

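Maxima/Minima: First Derivative Test: f'(x) changes from + to – at a local maximum, and from – to + at a local minimum. Second Derivative Test: at a critical point c with f'(c) = 0, f''(c) < 0 gives a local maximum and f''(c) > 0 gives a local minimum; if f''(c) = 0 the test is inconclusive.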

🔬 Applications:

Machine Learning Optimization: Finding model parameters that minimize loss function. Gradient descent: θ := θ – α∇J(θ). Second derivatives (Hessian) used in Newton’s method for faster convergence.
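The update rule θ := θ – α∇J(θ) can be seen in action on a one-dimensional toy loss. Here J(θ) = (θ − 3)², the starting point, and the learning rate are all illustrative assumptions.

```python
def grad_J(theta):
    """Gradient of the toy loss J(theta) = (theta - 3)**2."""
    return 2.0 * (theta - 3.0)

def gradient_descent(theta0, alpha, steps):
    theta = theta0
    for _ in range(steps):
        theta = theta - alpha * grad_J(theta)   # θ := θ – α∇J(θ)
    return theta

theta_star = gradient_descent(theta0=0.0, alpha=0.1, steps=100)
print(theta_star)    # very close to the minimizer 3.0
```

With α = 0.1 each step shrinks the distance to the minimum by a factor of 0.8, so the error decays geometrically. For this loss, choosing α above 1 would make the iterates diverge.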

Resource Optimization: Maximizing profit, minimizing cost, optimal inventory levels. Constraint optimization uses Lagrange multipliers.

Integration – Accumulation and Area

Introduction: Integration is inverse of differentiation. Computes accumulated change, areas, volumes, and totals. Essential for probability distributions, physics simulations, and computing expectations in machine learning.

Indefinite Integration: ∫f(x)dx = F(x) + C where F'(x) = f(x). Represents family of antiderivatives. Constant C captures arbitrary vertical shift.

Definite Integration: ∫ₐᵇf(x)dx represents signed area under curve from a to b. Gives numerical value (no + C).

Integration Techniques:

• Substitution: ∫f(g(x))g'(x)dx = ∫f(u)du where u = g(x)

• Integration by Parts: ∫u dv = uv – ∫v du

• Partial Fractions: Decompose rational functions

Fundamental Theorem of Calculus:

If F'(x) = f(x), then ∫ₐᵇf(x)dx = F(b) – F(a)
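The theorem can be checked numerically by comparing a Riemann-type sum with F(b) – F(a). This sketch uses the trapezoid rule on f(x) = x² over [0, 3]; the function names and the number of subintervals are illustrative.

```python
def trapezoid(f, a, b, n=1000):
    """Approximate the integral of f from a to b with n trapezoids."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

def f(x):
    return x ** 2

def F(x):
    return x ** 3 / 3     # an antiderivative of f

numeric = trapezoid(f, 0.0, 3.0)
exact = F(3.0) - F(0.0)   # 9.0

print(numeric, exact)
print(abs(numeric - exact) < 1e-4)    # True
```

Swapping the limits in `trapezoid` flips the sign of the step h, which mirrors the rule that reversing the limits of integration negates the integral.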

Properties:

• ∫_a^b f(x)dx = –∫_b^a f(x)dx

• ∫_a^b f(x)dx + ∫_b^c f(x)dx = ∫_a^c f(x)dx

• ∫ₐᵇ[f(x) ± g(x)]dx = ∫ₐᵇf(x)dx ± ∫ₐᵇg(x)dx

• ∫ₐᵇkf(x)dx = k∫ₐᵇf(x)dx

🔬 Real-World Applications in AI & Tech:

Probability and Statistics: Probability density functions integrate to 1: ∫₋∞^∞ f(x)dx = 1. Expected value E[X] = ∫xf(x)dx. Cumulative distribution F(x) = ∫₋∞^x f(t)dt.

Physics Simulations: Position from velocity: x(t) = ∫v(t)dt. Work W = ∫F·ds. Game engines integrate equations of motion for realistic movement.

Computer Graphics: Ray tracing integrates light along paths. Volume rendering integrates density along rays. Area computation for irregular shapes.

Signal Processing: Fourier transform: F(ω) = ∫₋∞^∞ f(t)e^(-iωt)dt converts time domain to frequency domain. Convolution integral combines signals.

Differential Equations – Modeling Dynamic Systems

Introduction: Differential equations relate functions to their derivatives. Model systems where rate of change depends on current state. Fundamental in physics, biology, economics, and control systems. Most natural phenomena described by differential equations.

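Classification: Order = order of the highest derivative. Degree = power of the highest-order derivative, once the equation is written as a polynomial in its derivatives. Linear vs. Nonlinear. Ordinary (ODE, one independent variable) vs. Partial (PDE, several independent variables).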

General vs. Particular Solution: General solution contains arbitrary constants. Particular solution satisfies initial/boundary conditions.

Solution Methods:

• Separation of Variables: Rearrange to f(y)dy = g(x)dx, then integrate both sides

• Homogeneous Equations: dy/dx = f(y/x), substitute v = y/x

• Linear First Order: dy/dx + P(x)y = Q(x)

Solution: y·e^(∫P dx) = ∫Q·e^(∫P dx) dx + C

Example Applications:

• Population Growth: dN/dt = rN (exponential growth)

• Newton’s Cooling: dT/dt = -k(T – Tₑₙᵥ)

• RC Circuit: dQ/dt + Q/(RC) = V/R
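When an equation cannot be solved by hand, it is stepped forward numerically. As a sketch, Euler's method is applied here to the population growth model dN/dt = rN, whose exact solution N(t) = N₀e^(rt) is known, so the error can be measured. The parameter values are illustrative.

```python
import math

def euler_growth(N0, r, t_end, n_steps):
    """Integrate dN/dt = r*N from t = 0 to t_end with Euler steps."""
    dt = t_end / n_steps
    N = N0
    for _ in range(n_steps):
        N += dt * r * N        # N(t + dt) ≈ N(t) + dt * dN/dt
    return N

N0, r, t_end = 100.0, 0.5, 2.0
approx = euler_growth(N0, r, t_end, n_steps=2000)
exact = N0 * math.exp(r * t_end)

print(approx, exact)
print(abs(approx - exact) / exact < 1e-3)   # True
```

Euler's method slightly underestimates exponential growth because each step uses the slope at the start of the interval; halving the step size roughly halves the error.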

🔬 Real-World Applications in AI & Tech:

Neural ODEs: Treat neural networks as continuous transformations: dh/dt = f(h(t), t, θ). Can be trained with memory cost that does not grow with depth by using the adjoint method, at the price of extra computation. Used in time-series modeling and continuous normalizing flows.

Physics Simulation: Newton’s second law F = ma becomes differential equation: d²x/dt² = F/m. Numerical integration (Euler, Runge-Kutta) solves for trajectories.

Control Systems: PID controller dynamics described by differential equations. State-space models: dx/dt = Ax + Bu. Stability analysis uses eigenvalues.

Epidemiology: SIR model uses coupled DEs: dS/dt = -βSI, dI/dt = βSI – γI, dR/dt = γI. COVID-19 modeling uses variants of these equations.

Vectors – Magnitude and Direction

Introduction: Vectors represent quantities with both magnitude and direction (velocity, force, displacement). Contrast with scalars (mass, temperature). Foundation of physics, computer graphics, machine learning, and robotics.

Vector Representation: In 2D: v = xi + yj. In 3D: v = xi + yj + zk where i, j, k are unit vectors along axes. Position vector: r = xi + yj + zk locates point (x,y,z) from origin.

Magnitude: |v| = √(x² + y² + z²). Unit vector: v̂ = v/|v| has magnitude 1.

Direction Cosines: If v makes angles α, β, γ with x, y, z axes, then cos α = x/|v|, cos β = y/|v|, cos γ = z/|v|. Note: cos²α + cos²β + cos²γ = 1.

Vector Operations:

• Addition: a + b = (a₁+b₁)i + (a₂+b₂)j + (a₃+b₃)k

• Scalar Multiplication: ka = ka₁i + ka₂j + ka₃k

Dot Product (Scalar Product):

a · b = |a||b|cos θ = a₁b₁ + a₂b₂ + a₃b₃

• Properties: commutative, distributive

• a · b = 0 if vectors perpendicular

• Projection of a on b: scalar projection (a · b)/|b|; vector projection ((a · b)/|b|) b̂

Cross Product (Vector Product):

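a × b = | i j k ; a₁ a₂ a₃ ; b₁ b₂ b₃ | (3×3 determinant, rows separated by semicolons) = (a₂b₃ – a₃b₂)i – (a₁b₃ – a₃b₁)j + (a₁b₂ – a₂b₁)k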

• Magnitude: |a × b| = |a||b|sin θ

• Direction: perpendicular to both a and b (right-hand rule)

• a × b = -b × a (anti-commutative)

• a × b = 0 if vectors parallel
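The component formulas for both products fit in a few lines. This sketch represents vectors as 3-tuples; the function names and sample vectors are illustrative.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Cross product of two 3D vectors, from the determinant expansion."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

a = (1, 2, 3)
b = (4, 5, 6)
c = cross(a, b)

print(dot(a, b))                     # 32
print(c)                             # (-3, 6, -3)
print(dot(a, c), dot(b, c))          # 0 0: c is perpendicular to both
print(cross(b, a))                   # (3, -6, 3), the negative of c
print(cross(a, (2, 4, 6)))           # (0, 0, 0): parallel vectors
```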

🔬 Real-World Applications in AI & Tech:

Machine Learning: Feature vectors represent data points in high-dimensional space. Cosine similarity a·b/(|a||b|) measures document similarity. Gradient ∇f is vector pointing in direction of steepest ascent.

Computer Graphics: Normal vectors define surface orientation for lighting. Cross product finds perpendicular vectors for coordinate systems. Dot product tests visibility and angles.

Physics & Robotics: Torque τ = r × F. Angular momentum L = r × p. Velocity vectors for motion planning. Force vectors in statics and dynamics.

Recommendation Systems: Items and users as vectors in latent space. Recommendations based on vector similarity. Collaborative filtering uses vector operations.

Three-Dimensional Geometry (Advanced)

Line Equations:

• Vector form: r = a + λb (point a, direction b)

• Cartesian form: (x-x₁)/l = (y-y₁)/m = (z-z₁)/n

where (l,m,n) are direction ratios

Angle Between Lines:

cos θ = |l₁l₂ + m₁m₂ + n₁n₂| / [√(l₁²+m₁²+n₁²) · √(l₂²+m₂²+n₂²)]
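A small sketch of the angle formula, where each line is given by its direction ratios (l, m, n). The function name is hypothetical. Because of the absolute value in the numerator, the result is always the acute (or right) angle between the lines.

```python
import math

def angle_between_lines(d1, d2):
    """Acute angle (radians) between two lines with direction ratios d1 and d2."""
    l1, m1, n1 = d1
    l2, m2, n2 = d2
    numerator = abs(l1 * l2 + m1 * m2 + n1 * n2)
    denominator = (math.sqrt(l1**2 + m1**2 + n1**2)
                   * math.sqrt(l2**2 + m2**2 + n2**2))
    # Clamp against rounding before taking the inverse cosine.
    return math.acos(min(1.0, numerator / denominator))

theta = angle_between_lines((1, 0, 0), (1, 1, 0))
print(math.degrees(theta))       # about 45 degrees

# Reversing one direction describes the same line, so the angle is unchanged.
theta_reversed = angle_between_lines((1, 0, 0), (-1, -1, 0))
```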

Shortest Distance Between Skew Lines:

For lines r = a₁ + λb₁ and r = a₂ + μb₂: d = |(a₂-a₁)·(b₁×b₂)|/|b₁×b₂|
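The distance formula can be sketched as follows, taking the two lines as r = a₁ + λb₁ and r = a₂ + μb₂. The helper names and the sample lines are assumptions for illustration. The formula breaks down for parallel lines, where b₁ × b₂ = 0, so the sketch rejects that case.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def skew_distance(a1, b1, a2, b2):
    """Shortest distance d = |(a2 - a1) . (b1 x b2)| / |b1 x b2|."""
    n = cross(b1, b2)                 # common perpendicular direction
    n_len = math.sqrt(dot(n, n))
    if n_len == 0:
        raise ValueError("lines are parallel; this formula does not apply")
    return abs(dot(sub(a2, a1), n)) / n_len

# The x-axis, and a line parallel to the y-axis passing through (0, 0, 5).
d = skew_distance((0, 0, 0), (1, 0, 0), (0, 0, 5), (0, 1, 0))
print(d)                              # 5.0
```

A result of 0 means the two lines intersect.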

🔬 Applications:

Ray Tracing: Rays as lines in 3D space. Intersection with surfaces determines rendering. Reflection/refraction follow geometric laws.

Collision Detection: Minimum distance between objects. Line-sphere, line-plane intersections. Critical for games and simulations.
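As one hedged example of a line-sphere intersection test: substituting the line r = a + λb into the sphere equation |r − c|² = R² gives a quadratic in λ, and the sign of its discriminant tells whether the line misses, touches, or pierces the sphere. The sphere centre c, radius R, and function name below are assumptions introduced for this sketch.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def line_sphere_intersections(a, b, center, radius):
    """Points where the line r = a + lambda*b meets the sphere |r - center| = radius.

    Solves (b.b)*lambda^2 + 2*(b.oc)*lambda + (oc.oc - radius^2) = 0, with oc = a - center.
    The direction b must be nonzero.
    """
    oc = tuple(ai - ci for ai, ci in zip(a, center))
    qa = dot(b, b)
    qb = 2 * dot(b, oc)
    qc = dot(oc, oc) - radius ** 2
    disc = qb * qb - 4 * qa * qc
    if disc < 0:
        return []                                  # the line misses the sphere
    root = math.sqrt(disc)
    # A set removes the duplicate when the line is tangent (disc == 0).
    lambdas = sorted({(-qb - root) / (2 * qa), (-qb + root) / (2 * qa)})
    return [tuple(ai + lam * bi for ai, bi in zip(a, b)) for lam in lambdas]

hits = line_sphere_intersections((0, 0, 0), (1, 0, 0), (5, 0, 0), 1)
print(hits)       # [(4.0, 0.0, 0.0), (6.0, 0.0, 0.0)]
misses = line_sphere_intersections((0, 0, 0), (1, 0, 0), (5, 5, 0), 1)
print(misses)     # []
```

For ray tracing, only solutions with λ ≥ 0 lie on the ray, and the smallest such λ gives the first visible hit.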

Linear Programming – Optimization Under Constraints

Introduction: Linear programming optimizes linear objective function subject to linear constraints. Widely used in operations research, resource allocation, scheduling, and supply chain management. Many real-world optimization problems are linear or can be approximated as such.

Standard Form: Maximize (or Minimize) Z = c₁x₁ + c₂x₂ + … + cₙxₙ subject to constraints a₁₁x₁ + a₁₂x₂ + … ≤ b₁, etc., and x₁, x₂, … ≥ 0 (non-negativity).

Feasible Region: Set of all points satisfying all constraints. In 2D, typically a convex polygon. If the feasible region is non-empty and bounded, an optimal solution occurs at a vertex (corner point) of the region.

Graphical Method: Plot constraints, identify feasible region, evaluate objective function at corner points, select optimal value.
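The graphical method can be mimicked in code for a small two-variable problem. The sketch below uses a made-up example that is not from the text: maximize Z = 3x + 5y subject to x + y ≤ 4, x + 3y ≤ 6, x ≥ 0, y ≥ 0. It intersects every pair of constraint boundary lines, keeps the feasible intersection points (the corner points), and evaluates Z at each. It assumes the feasible region is non-empty and bounded, and all names are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Each constraint (a, b, c) means a*x + b*y <= c.
# Non-negativity is written as -x <= 0 and -y <= 0.
constraints = [(1, 1, 4), (1, 3, 6), (-1, 0, 0), (0, -1, 0)]

def objective(point):
    x, y = point
    return 3 * x + 5 * y

def is_feasible(point):
    x, y = point
    return all(a * x + b * y <= c for a, b, c in constraints)

def corner_points():
    """Intersect each pair of boundary lines and keep the feasible points."""
    points = set()
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue                       # parallel boundaries never meet
        # Cramer's rule, with exact fractions to avoid rounding issues.
        x = Fraction(c1 * b2 - c2 * b1, det)
        y = Fraction(a1 * c2 - a2 * c1, det)
        if is_feasible((x, y)):
            points.add((x, y))
    return points

vertices = corner_points()
best = max(vertices, key=objective)
print(f"x={best[0]}, y={best[1]}, Z={objective(best)}")   # x=3, y=1, Z=14
```

Enumerating corner points this way only scales to tiny problems, since the number of pairs grows quickly with the number of constraints.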

🔬 Real-World Applications in AI & Tech:

Resource Allocation: Allocate limited resources (CPU, memory, bandwidth) to maximize throughput or minimize cost. Cloud computing uses LP for VM placement.

Machine Learning: Support Vector Machines are formulated as quadratic programs (an extension of LP). Feature selection can be posed as an integer linear program. Training some models, such as least-absolute-deviation regression, reduces to LP.

Supply Chain Optimization: Minimize transportation costs while meeting demand. Production planning, inventory management. Simplex algorithm efficiently solves large-scale LPs.

Network Flow: Maximum flow and minimum cost flow problems can be written as LPs. Applications include traffic routing and communication networks, where traffic engineering applies the same principles.

Probability (Advanced) – Conditional and Bayesian

Introduction: Advanced probability concepts handle dependencies between events. Conditional probability and Bayes’ theorem are foundational for machine learning, particularly in classification, inference, and decision-making under uncertainty.

Conditional Probability: P(A|B) = P(A ∩ B)/P(B), defined when P(B) > 0, is the probability of A given that B has occurred. It represents updated belief after observing evidence.

Multiplication Theorem: P(A ∩ B) = P(A) · P(B|A) = P(B) · P(A|B). For independent events, P(A ∩ B) = P(A) · P(B) since P(B|A) = P(B).
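A worked check of these formulas, as a sketch on an assumed example: two fair dice, with A = "the sum is 8", B = "the first die is even", and C = "the second die is even". The events and helper names are chosen for illustration and do not come from the text. Probabilities are computed by counting equally likely outcomes.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 ordered outcomes of two fair dice.
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(event) by counting equally likely outcomes."""
    favourable = sum(1 for o in outcomes if event(o))
    return Fraction(favourable, len(outcomes))

def both(e1, e2):
    """The intersection of two events."""
    return lambda o: e1(o) and e2(o)

def cond_prob(a, b):
    """P(a | b) = P(a and b) / P(b); requires P(b) > 0."""
    return prob(both(a, b)) / prob(b)

A = lambda o: o[0] + o[1] == 8      # sum is 8
B = lambda o: o[0] % 2 == 0         # first die is even
C = lambda o: o[1] % 2 == 0         # second die is even

print(cond_prob(A, B))              # 1/6
print(prob(both(A, B)))             # 1/12
print(prob(A) * cond_prob(B, A))    # 1/12, the multiplication theorem
print(prob(both(B, C)) == prob(B) * prob(C))   # True: B and C are independent
```

Here P(A|B) = 1/6 differs from P(A) = 5/36, so A and B are dependent, while B and C satisfy the product rule for independent events.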

🎯 Key Takeaways for AI & Tech Innovation

Connecting Mathematics to Modern Technology

The mathematical concepts we’ve explored form the theoretical foundation of modern artificial intelligence and technological innovation. Here’s how they interconnect:

Machine Learning Pipeline: Linear algebra (matrices, vectors) represents data and model parameters. Calculus (derivatives, gradients) enables optimization through gradient descent. Probability theory handles uncertainty and makes predictions. Statistics evaluates model performance.

Deep Learning: Neural networks are compositions of matrix multiplications and nonlinear activations. Backpropagation applies the chain rule through network layers. Training uses gradient-based optimizers (Adam, momentum methods). Regularization can often be interpreted probabilistically, for example as a prior on the weights.

Computer Vision: Images as matrices. Convolution as matrix operation. Edge detection using gradients. Geometric transformations via matrix multiplication. Object detection using probability distributions.

Natural Language Processing: Words as vectors (embeddings). Attention mechanisms use dot products. Transformer positional encodings use trigonometric functions. Language models compute probability distributions.

Robotics & Control: Kinematics uses geometry and trigonometry. Dynamics modeled with differential equations. Control systems use calculus and linear algebra. Path planning applies optimization and graph theory.

Cryptography & Security: Number theory (primes, modular arithmetic). Probability for key generation. Complexity theory determines security levels. Algebraic structures (groups, fields) underlie modern encryption.

🚀 Practical Advice for Students:

Master the Fundamentals: Don’t just memorize formulas. Understand the intuition behind concepts. Practice deriving results from first principles. This deeper understanding enables innovation.

Connect Theory to Practice: Implement algorithms from scratch. Visualize mathematical concepts through code. Build projects that apply multiple mathematical domains simultaneously.

Embrace Computational Thinking: Use tools like Python (NumPy, SciPy, SymPy), MATLAB, or Mathematica. Numerical computation complements analytical mathematics. Simulation validates theoretical understanding.

Study Interdisciplinary Applications: Follow how mathematics appears in research papers. Read about latest AI breakthroughs and identify mathematical components. Mathematics is the universal language of science and technology.

Develop Problem-Solving Skills: Mathematics trains rigorous logical thinking. Proof techniques develop careful reasoning. Optimization problems teach systematic approaches. These skills transfer across all technical domains.

Mathematics → AI/Tech Innovation Pipeline

• Linear Algebra → Neural Networks

• Calculus → Optimization

• Probability → Predictions

• Statistics → Evaluation

• Geometry → Computer Vision

• Differential Equations → Simulations

📚 Summary and Future Directions

Congratulations! You’ve covered comprehensive mathematical fundamentals essential for AI and technology careers. This knowledge forms the bedrock upon which advanced topics are built.

Next Steps in Your Mathematical Journey:

Advanced Linear Algebra: Eigenvalues/eigenvectors, singular value decomposition (SVD), matrix factorizations. Critical for PCA, recommendation systems, and understanding neural network behavior.

Multivariable Calculus: Partial derivatives, multiple integrals, vector calculus, gradient/divergence/curl. Essential for understanding optimization in high dimensions and field theories.

Real Analysis: Rigorous foundations of limits, continuity, convergence. Provides theoretical understanding of why machine learning algorithms converge.

Optimization Theory: Convex optimization, constrained optimization, Lagrange multipliers. Core of training machine learning models and operations research.

Information Theory: Entropy, mutual information, KL divergence. Foundational for understanding loss functions, compression, and communication systems.

Graph Theory: Networks, connectivity, shortest paths. Powers social networks, routing algorithms, and knowledge graphs.

Numerical Methods: Solving equations computationally, numerical integration/differentiation, approximation theory. Bridges continuous mathematics and discrete computation.

“Mathematics is not about numbers, equations, computations, or algorithms: it is about understanding.” – William Paul Thurston

🌟 Final Thoughts:

The mathematics you’ve learned here isn’t just abstract theory—it’s the language in which the future is written. Every breakthrough in artificial intelligence, from GPT models to AlphaGo, from self-driving cars to protein folding prediction, stands on this mathematical foundation.

As you continue your journey in engineering and technology, remember that mathematical thinking—the ability to abstract, formalize, and reason precisely—is your most powerful tool. Whether you’re debugging code, designing algorithms, or pushing the boundaries of what’s possible with AI, you’re applying these mathematical principles.

Keep learning, keep building, and keep innovating. The future of technology is mathematical, and you’re now equipped to shape it.